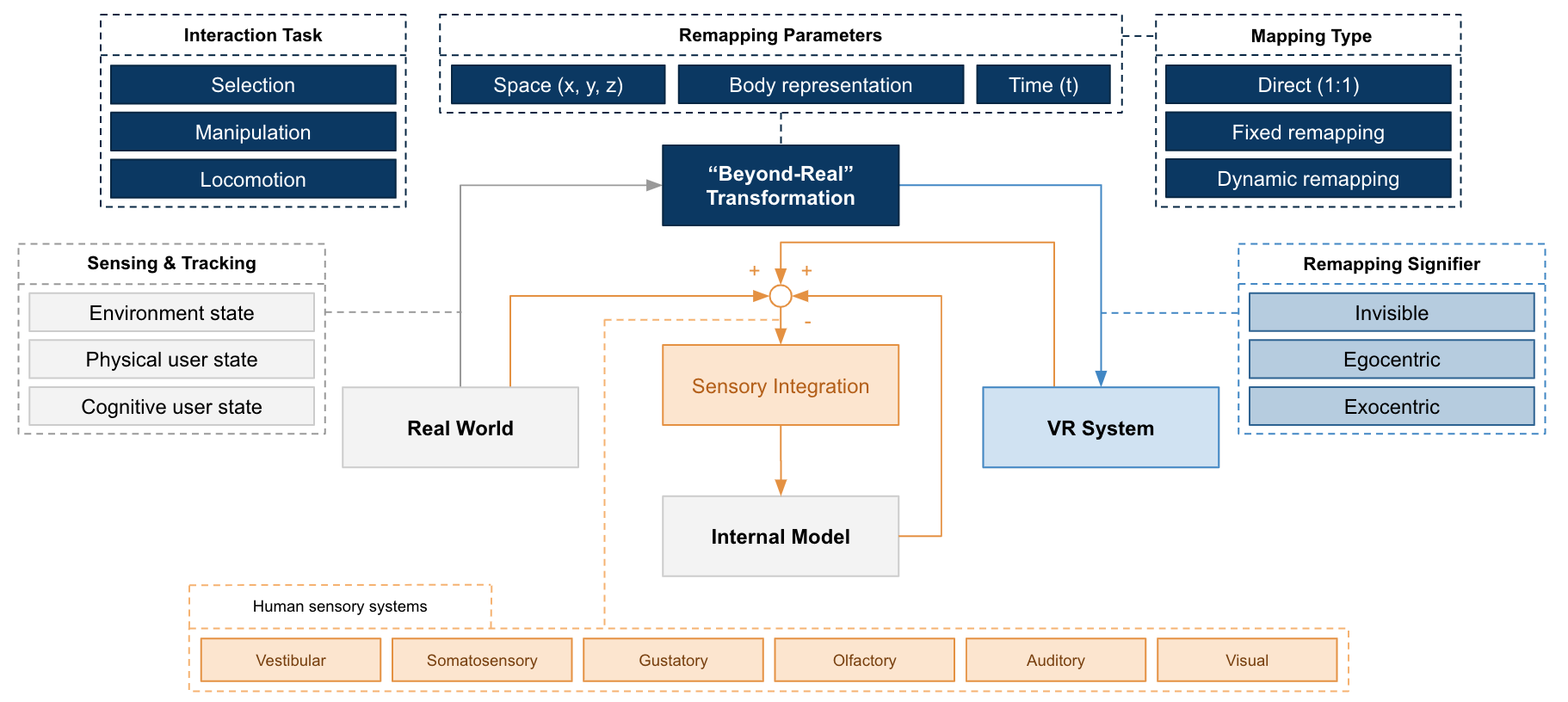

The Beyond Being Real Framework: The VR system receives input from the real world, applies beyond-real transformations, and renders the remapping in VR. Users receive sensory information from both the real world and the VR system and integrate the two. Understanding this sensory integration, and how the user’s internal model is updated accordingly, is integral to exploring open research questions around beyond-real VR interactions.

Abstract

We can create Virtual Reality (VR) interactions that have no equivalent in the real world by remapping spacetime or altering users’ body representation, such as stretching the user’s virtual arm to manipulate distant objects or scaling up the user’s avatar to enable rapid locomotion. Prior research has leveraged such approaches, which we call beyond-real techniques, to make interactions in VR more practical, efficient, ergonomic, and accessible. We present a survey categorizing prior movement-based VR interaction literature as reality-based, illusory, or beyond-real interactions. We survey relevant conferences (CHI, IEEE VR, VRST, UIST, and DIS), focusing on selection, manipulation, locomotion, and navigation in VR. For beyond-real interactions, we describe the transformations that prior works have used to create novel remappings. We discuss open research questions through the lens of the human sensorimotor control system and highlight challenges that need to be addressed for effective utilization of beyond-real interactions in future VR applications, including plausibility, control, long-term adaptation, and individual differences.

Supplementary Materials

- For the list of papers that were included in our survey, please see Appendix A.

- For a walk-through example and more information on how to use our framework, please see Appendix B.