We develop advanced technologies in robotics, mechatronics, and sensing to create interactive, dynamic physical 3D displays and haptic interfaces that allow 3D information to be touched as well as seen. We are specifically interested in using these novel interfaces to support richer remote collaboration, computer-aided design, education, and interfaces for people with visual impairments. In pursuit of these goals, we use a design process grounded in iterative prototyping and human-centered design, and we run controlled studies to build new understanding of human perception and interaction.

Our research in Human-Computer Interaction and Human-Robot Interaction is currently directed in six areas:

- Modeling and Applying Visuo-Haptic Illusions and Multimodal Haptics

- Dissipative Haptic Devices

- Accessible STEM Education Through Haptic and Multimodal Interaction

- Interaction and Display with Swarm User Interfaces

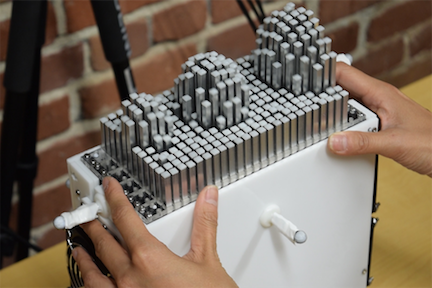

- Shape Changing Robots and Displays

- Design Tools

Shape-shifting Tech Will Change Work As We Know It

See a video of our vision!

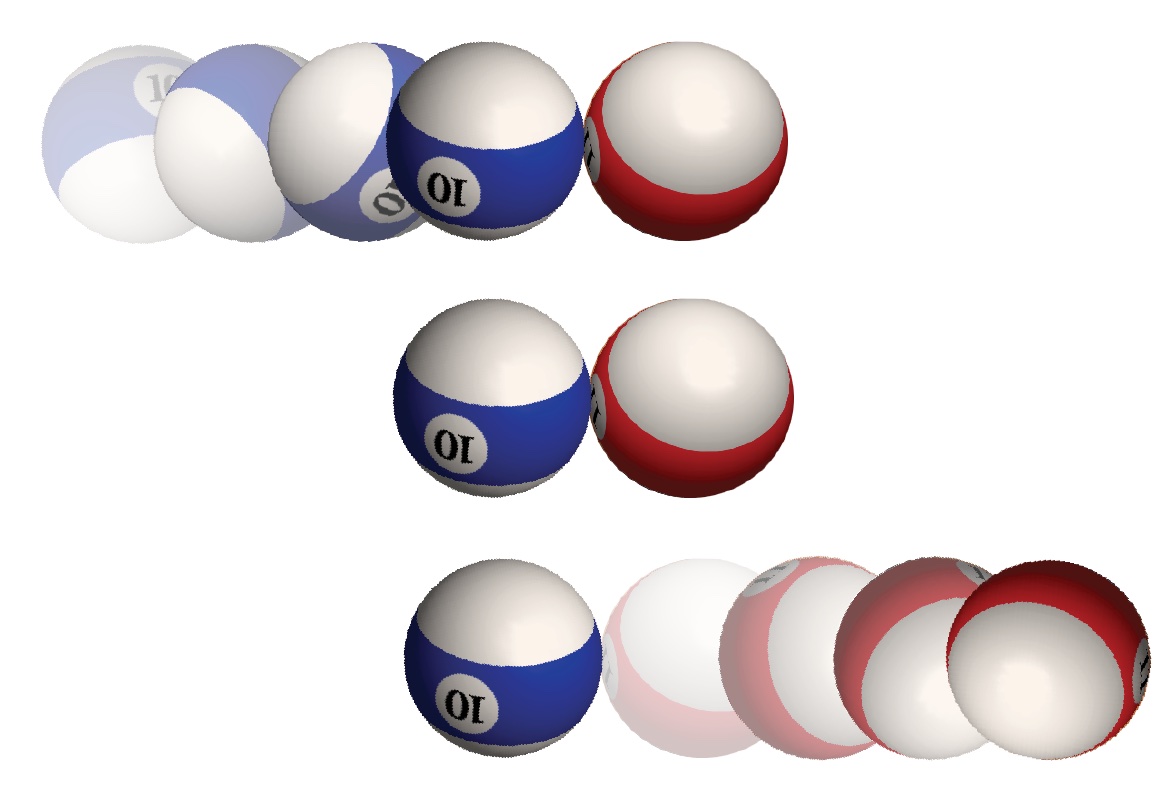

Realism in Phenomenal Causality

The role of realism across multisensory cues within causal perception

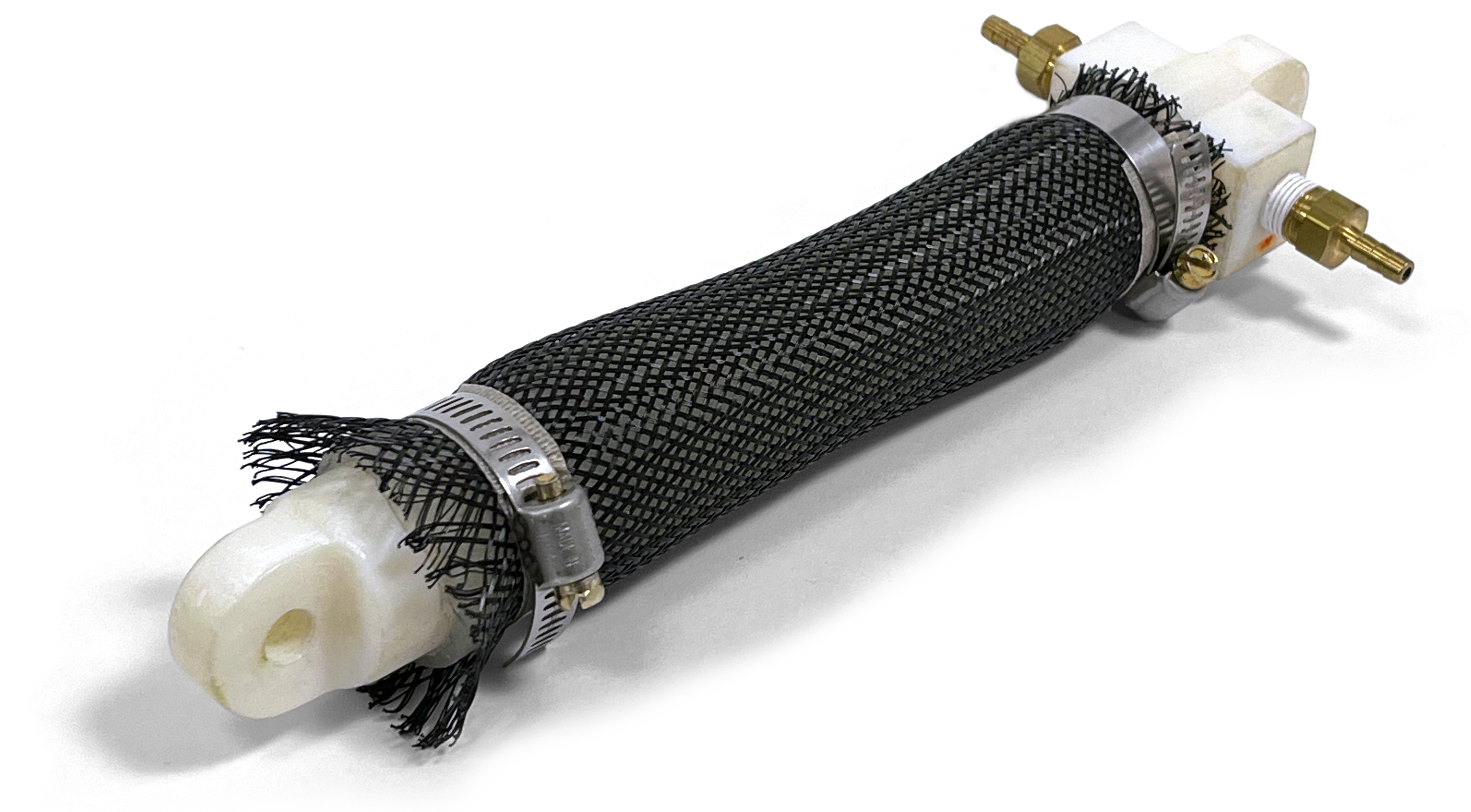

An All-Soft Variable Impedance Actuator Enabled by Embedded Layer Jamming

A multifunctional soft artificial muscle

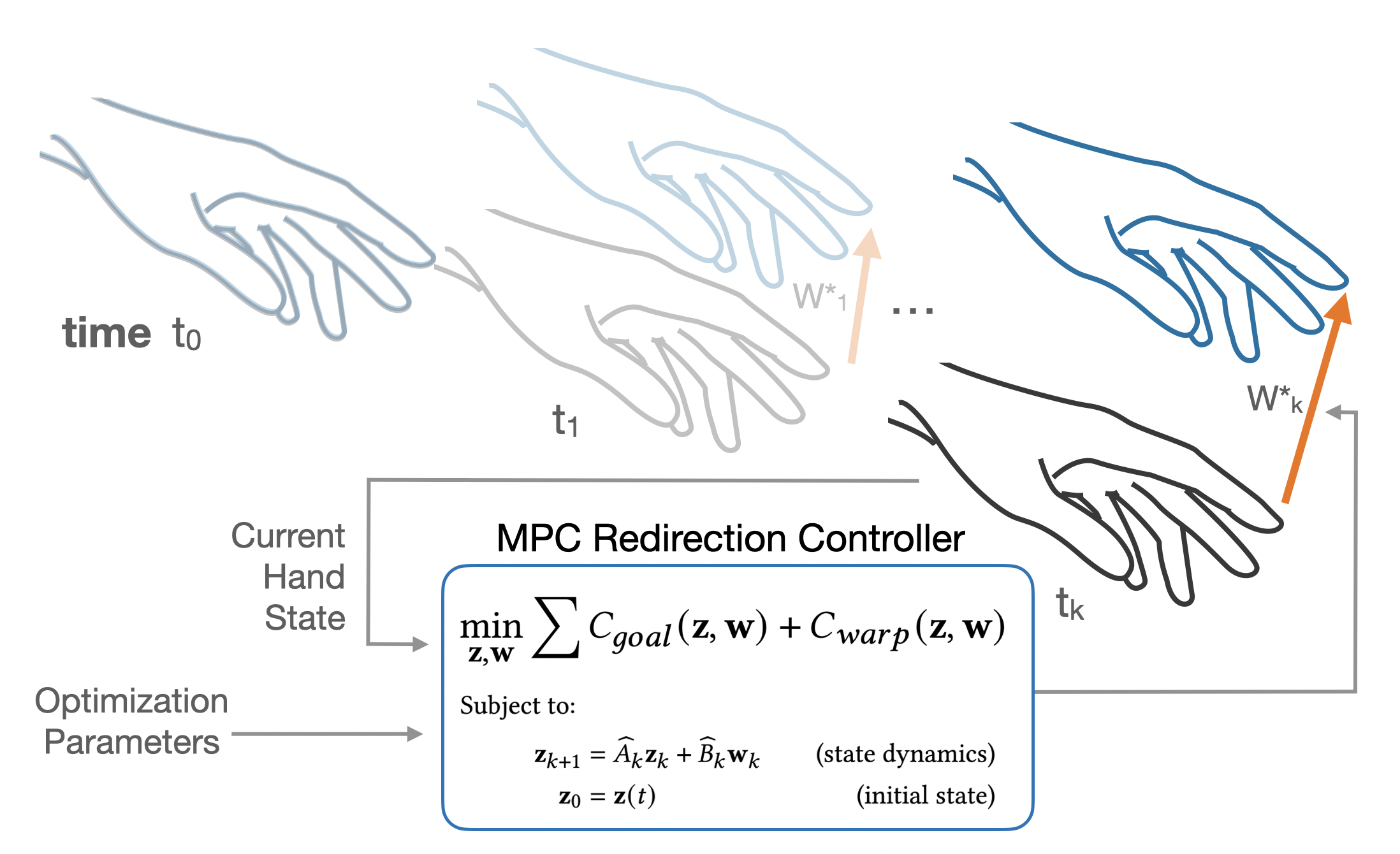

MPC for Reach Redirection

Real-time VR spatial remapping using human sensorimotor models

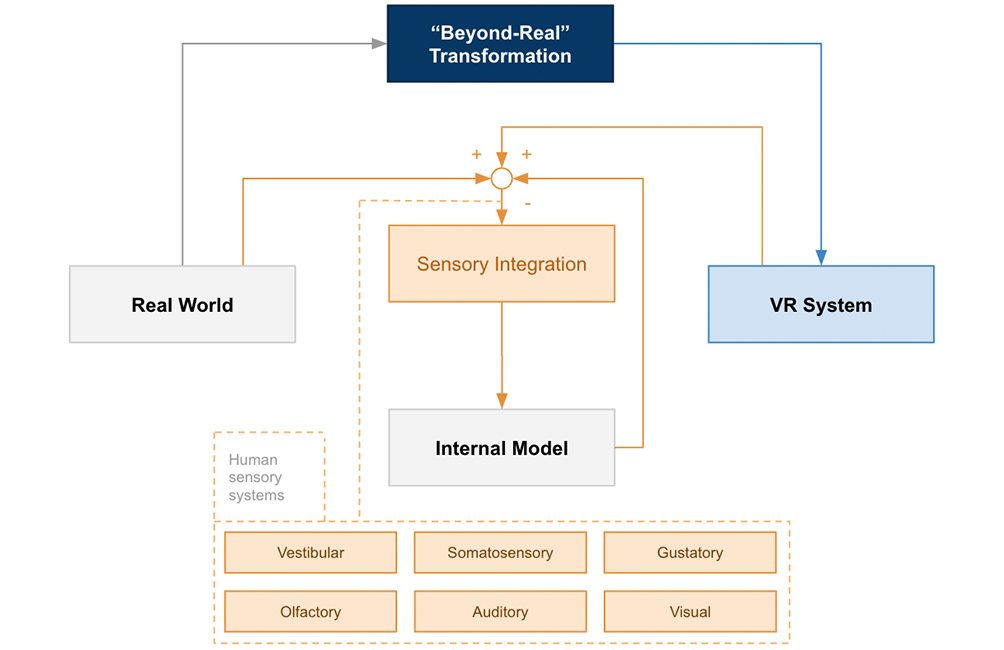

Beyond Being Real

A Sensorimotor Control Perspective on Interactions in Virtual Reality

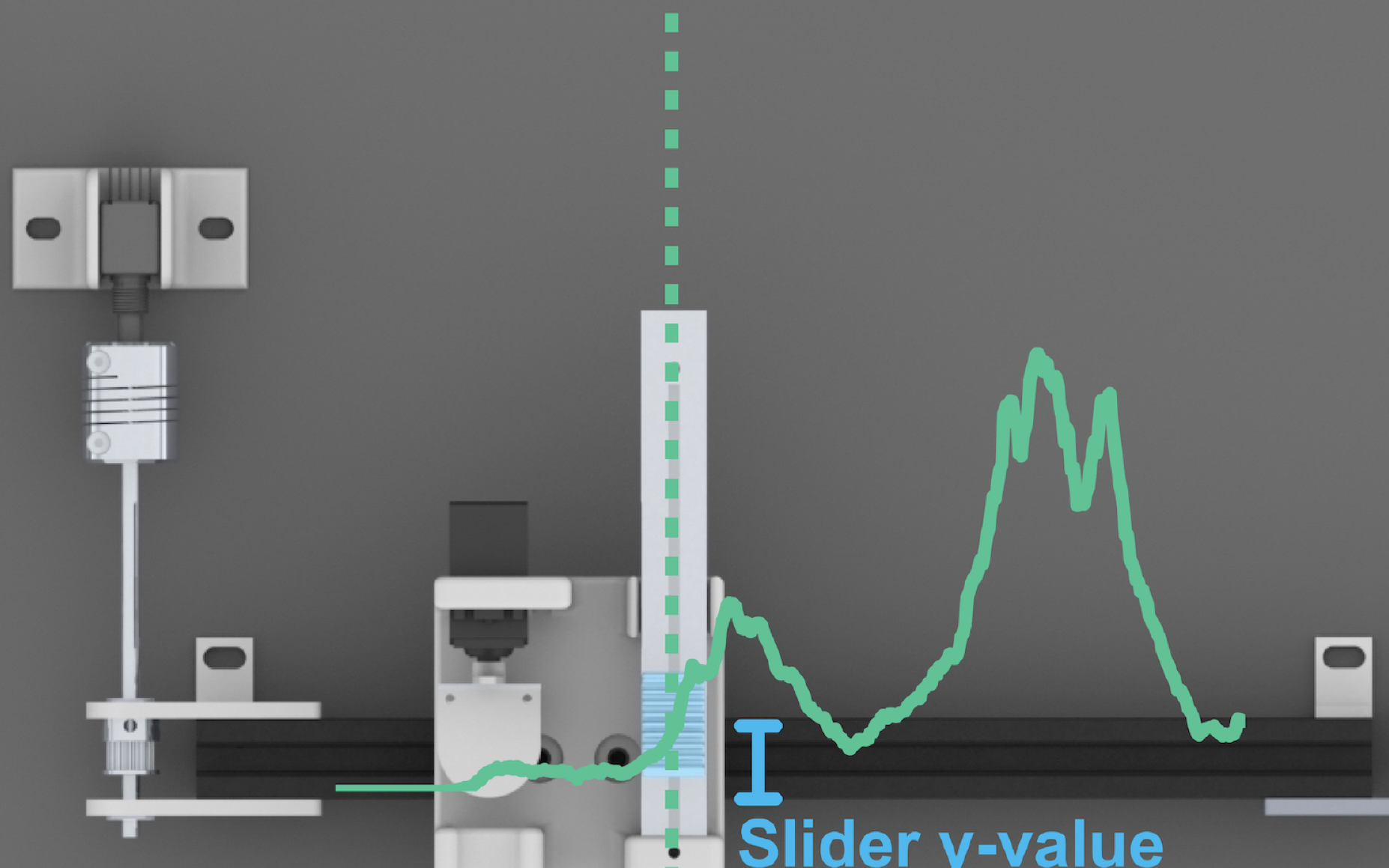

Slide-tone and Tilt-tone

1-DOF Haptic Techniques for Conveying Shape Characteristics of Graphs to Blind Users

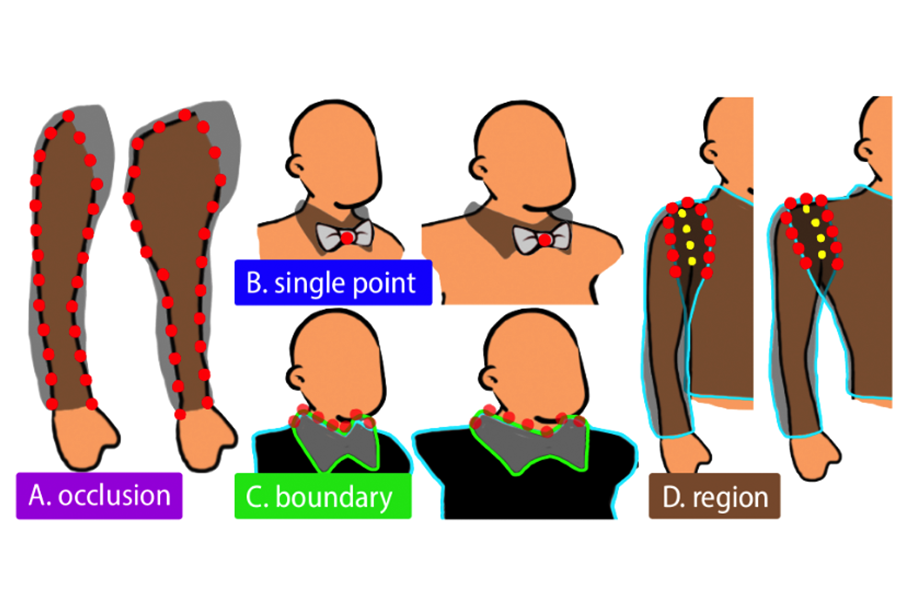

Automated Accessory Rigs

for Layered 2D Character Illustrations

A Causal Feeling

How Kinesthetic Haptics Affects Causal Perception

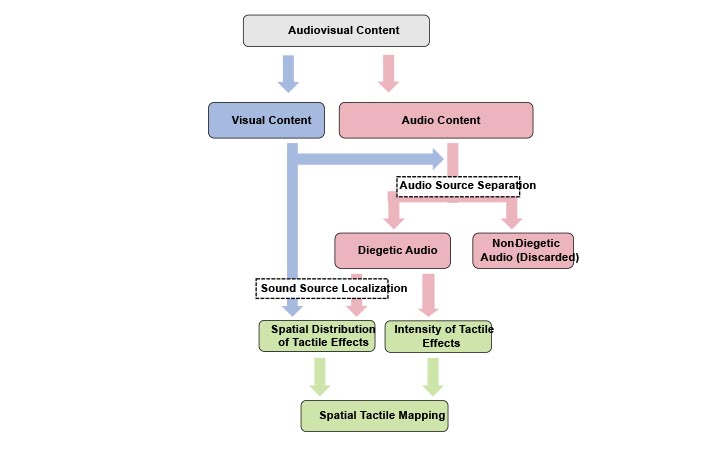

Auto Haptics

Creating spatial tactile effects automatically by analyzing cross-modality features of a video

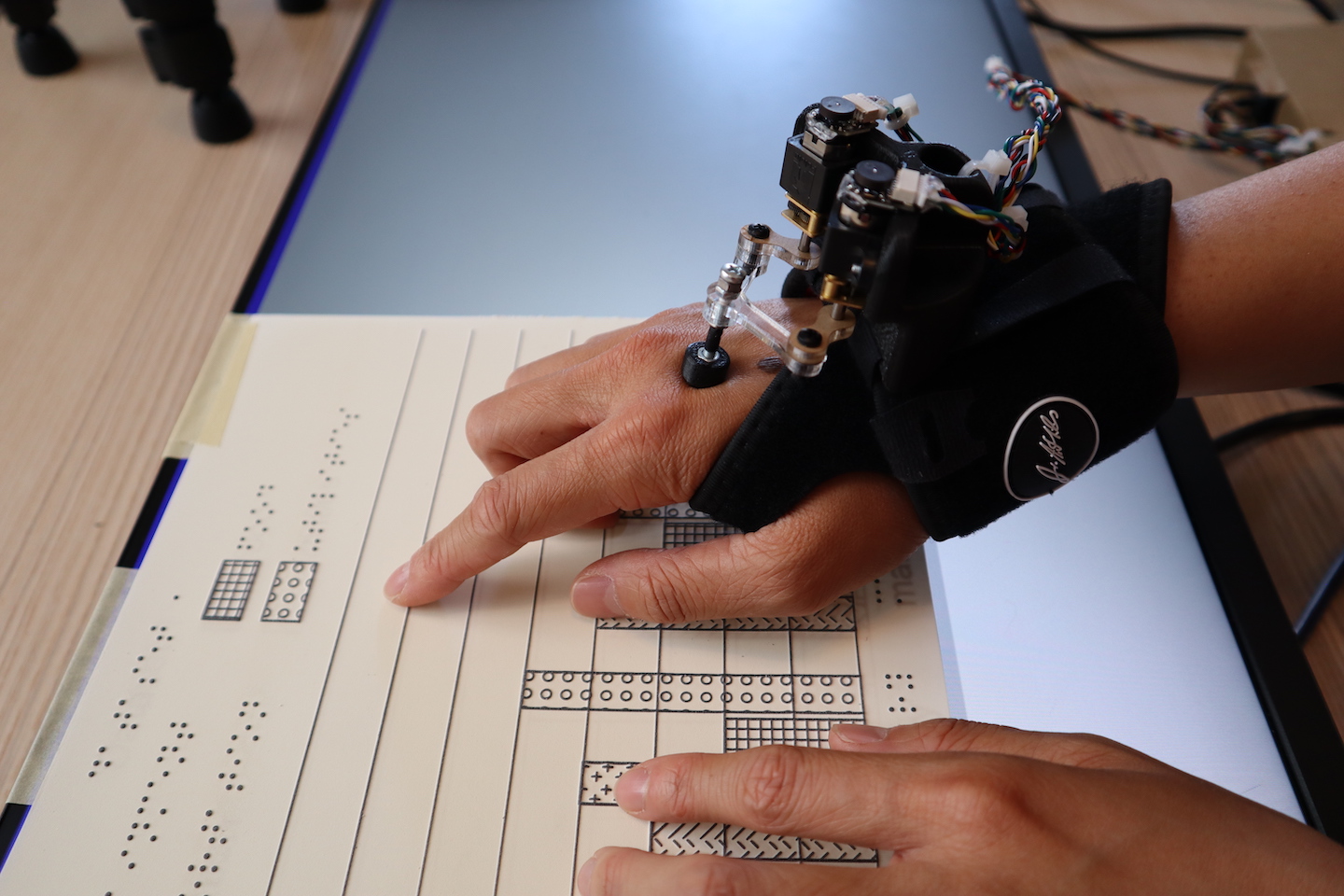

PantoGuide

A Haptic and Audio Guidance System To Support Tactile Graphics Exploration

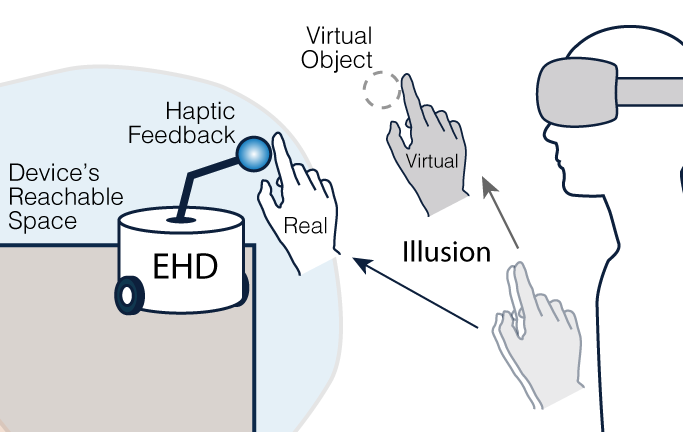

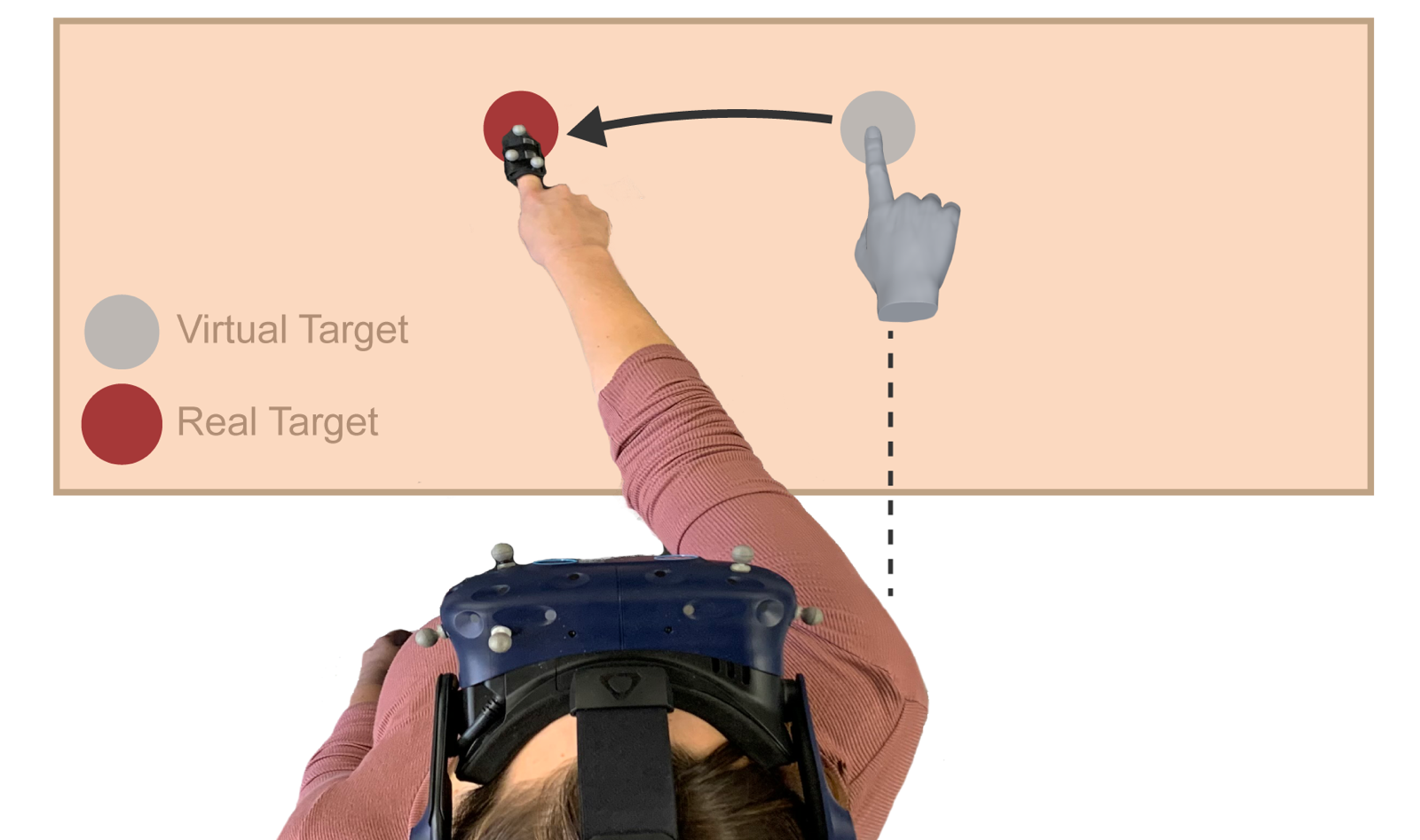

REACH+

Extending the Limits of Haptic Mobile Robots with Redirection in VR

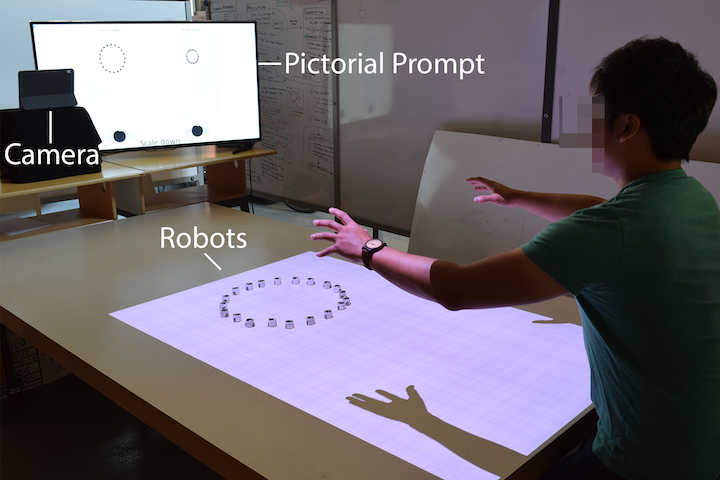

Swarm Control

User-defined Swarm Robot Control

Transient Vibration + Visuo-Haptic Illusions

Augmenting Perceived Softness of Haptic Proxy Objects

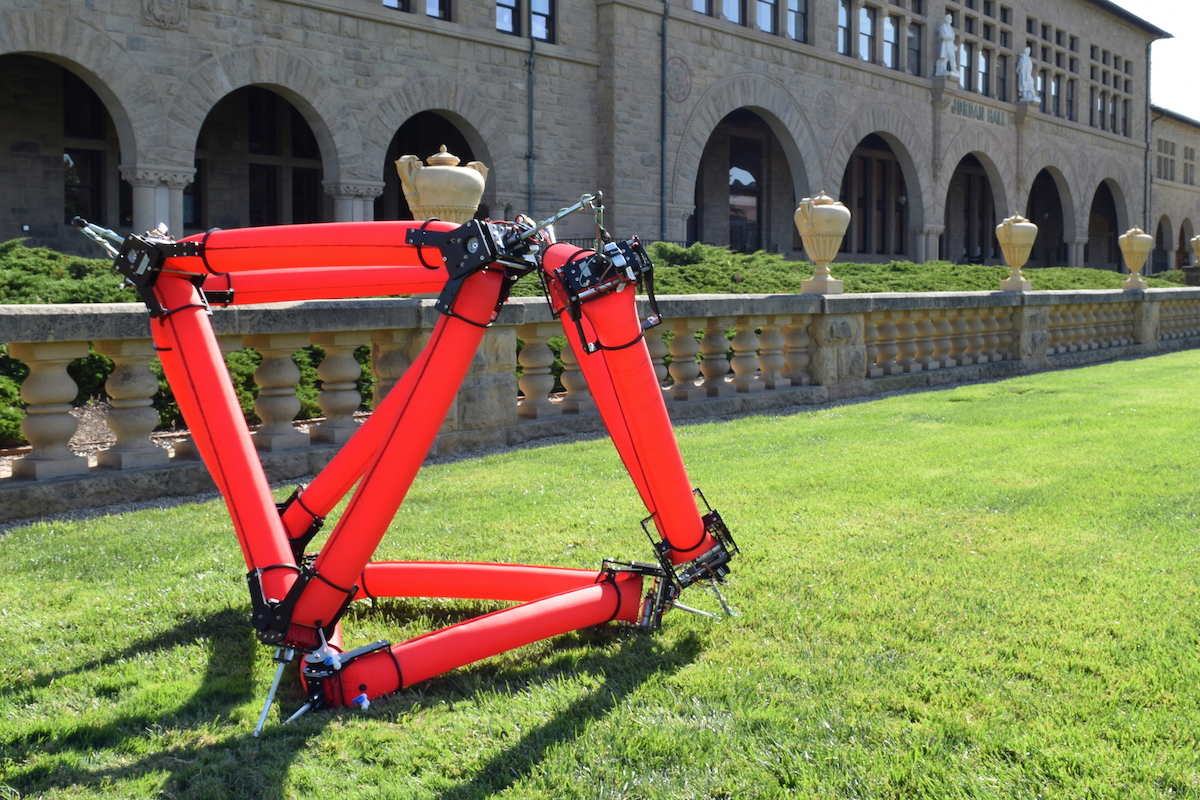

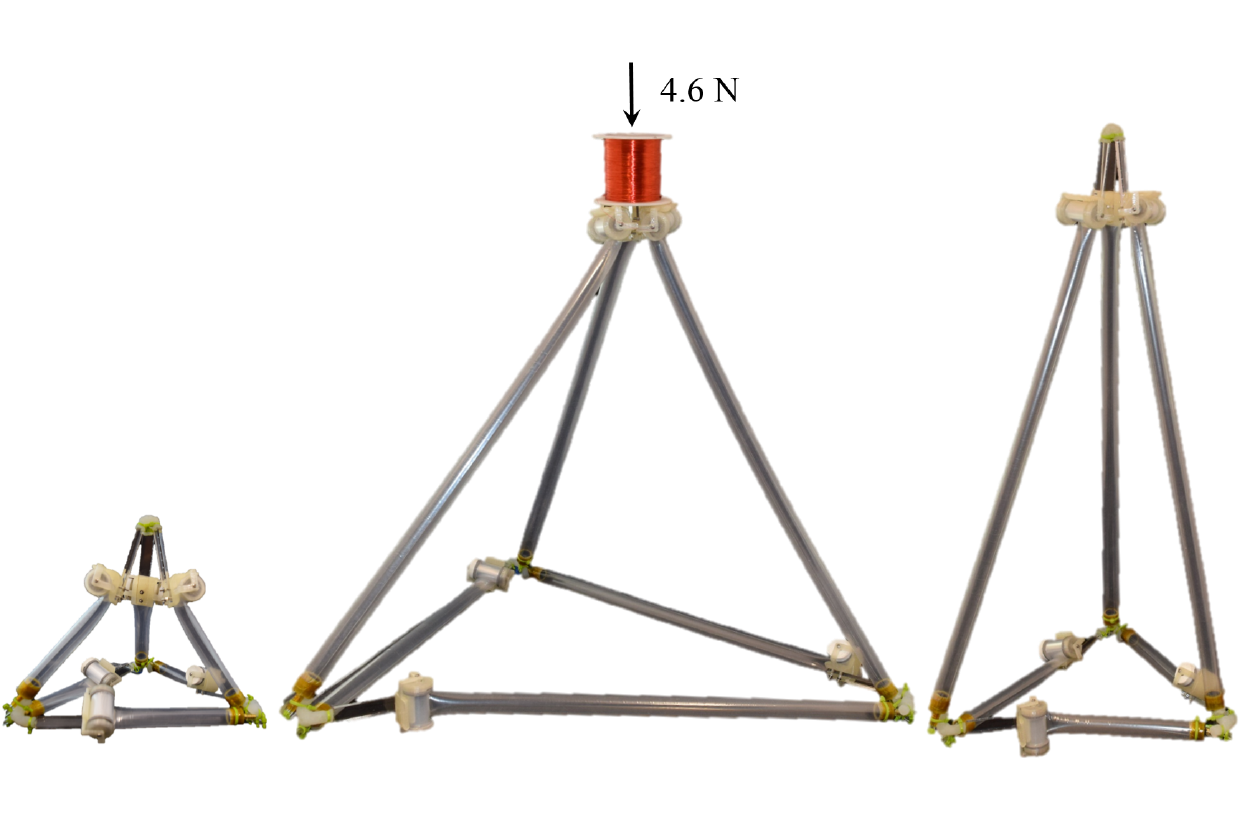

An untethered isoperimetric soft robot

Shape changing truss robots that crawl and engulf.

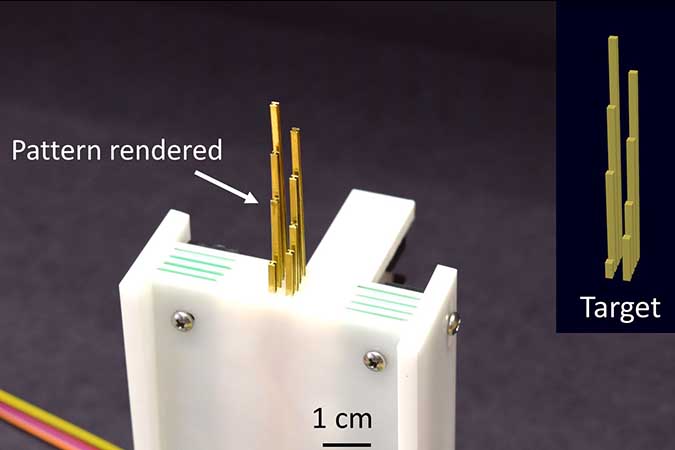

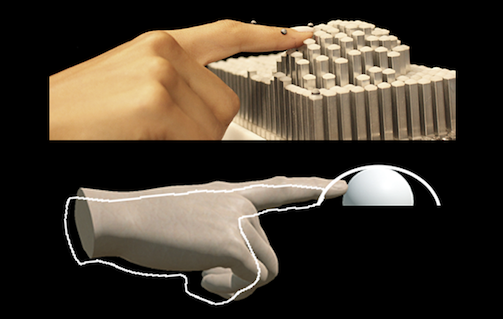

Electrostatic Adhesive Brakes

Towards High Spatial Resolution Refreshable 2.5D Tactile Shape Displays

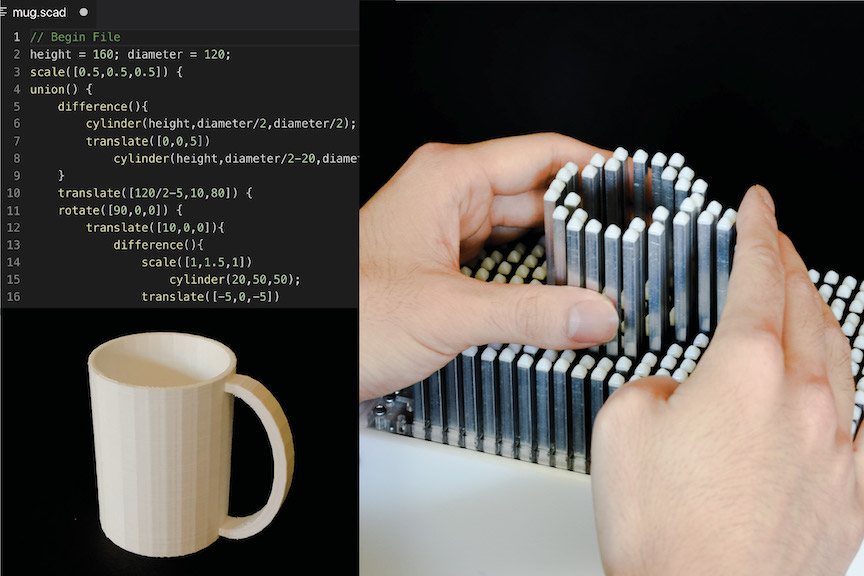

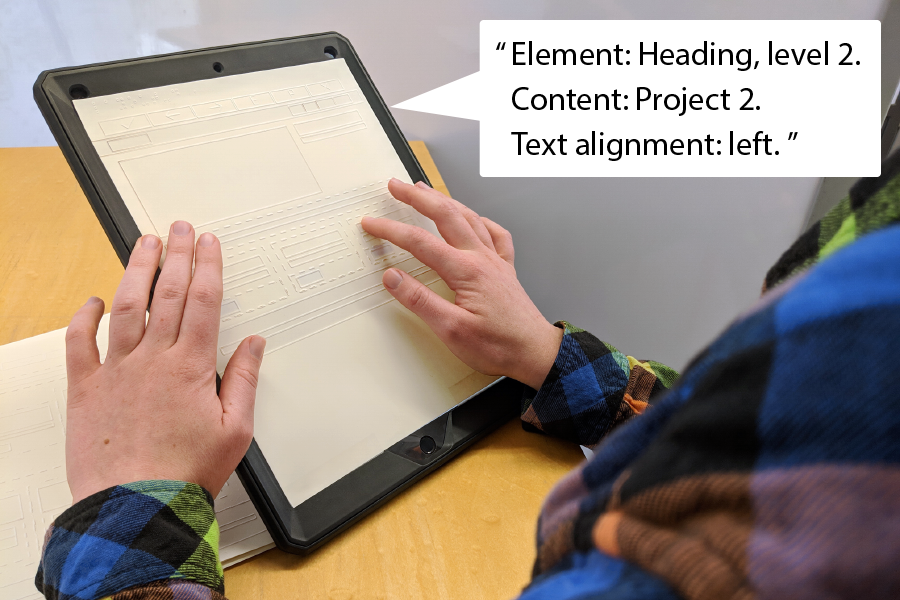

shapeCAD

An Accessible 3D Modelling Workflow for the Blind and Visually-Impaired Via 2.5D Shape Displays

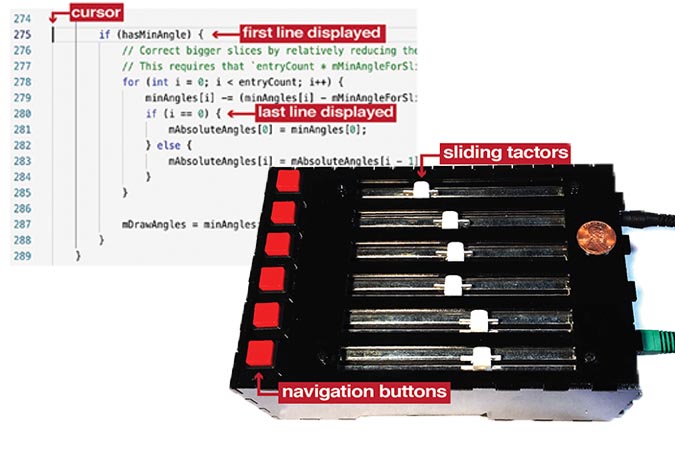

Tactile Code Skimmer

A Tool to Help Blind Programmers Feel the Structure of Code

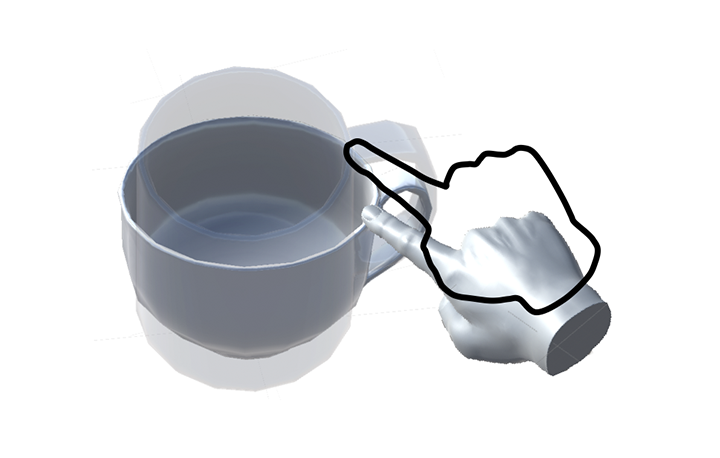

Understanding Redirected Touch In Virtual Reality

Augmenting haptic interaction in VR through perceptual illusions.

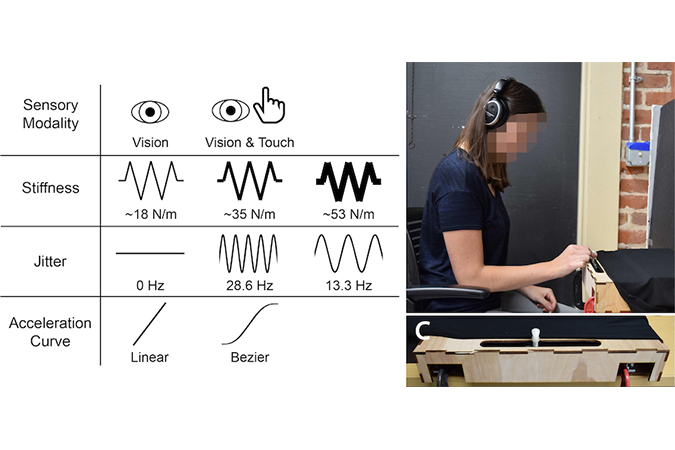

Visual and Haptic Perception of Expressive 1 DoF Motion

Understanding user perception of expressive robotic motion through different sensory modalities

Hover Haptics

Using Quadcopters to Appropriate Objects and the Environment for Haptics in Virtual Reality

SwarmHaptics

Haptic Display with Swarm Robots

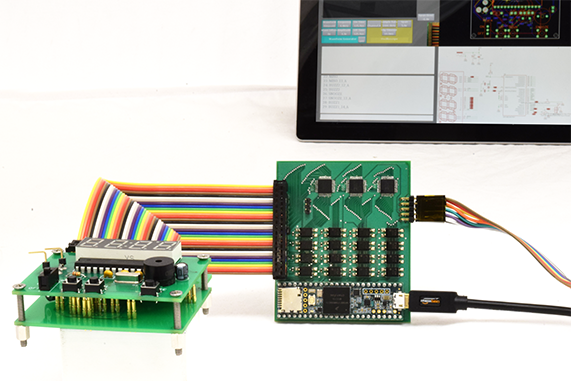

Pinpoint

Automated Instrumentation for In-Circuit PCB Debugging with Dynamic Component Isolation

Editing Spatial Layouts through Tactile Templates

Editing Spatial Layouts through Tactile Templates for People with Visual Impairments

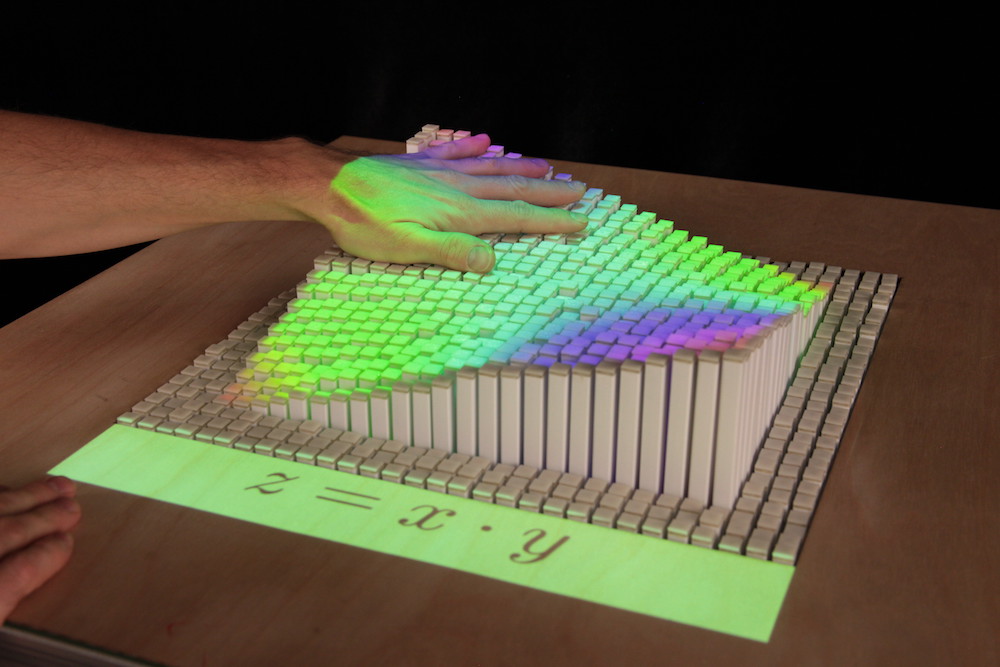

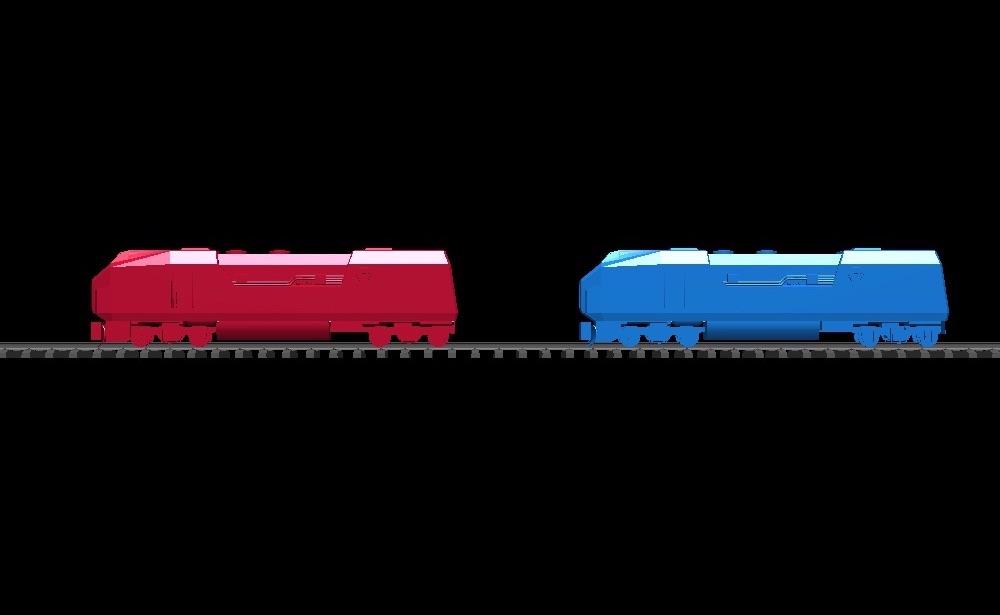

Dynamic Composite Data Physicalization

Physical visualizations that use collections of self-propelled objects to represent data

Visuo-Haptic Illusions

Visuo-Haptic Illusions for Improving the Perceived Performance of Shape Displays

3D Retargeted Touch in Haptics VR

A Functional Optimization Based Approach for Continuous 3D Retargeted Touch of Arbitrary, Complex Boundaries in Haptic Virtual Reality

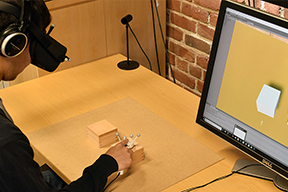

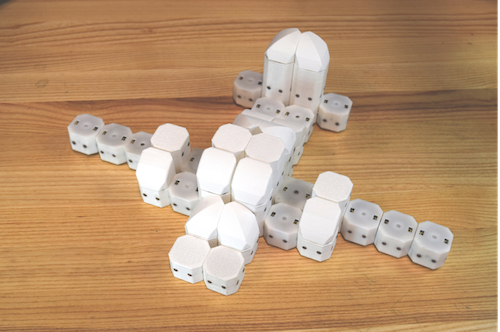

shapeShift

A Mobile Tabletop Shape Display for Tangible and Haptic Interaction

Robotic Assembly

Robotic Assembly of Haptic Proxy Objects for Tangible Interaction and Virtual Reality

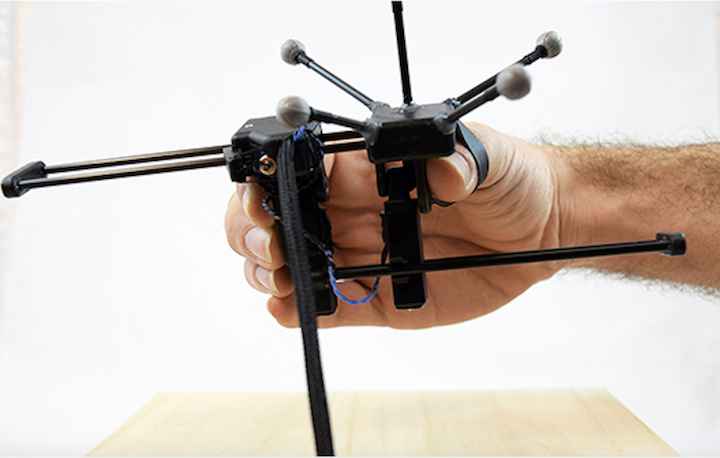

grabity

A Wearable Haptic Interface for Simulating Weight and Grasping in Virtual Reality

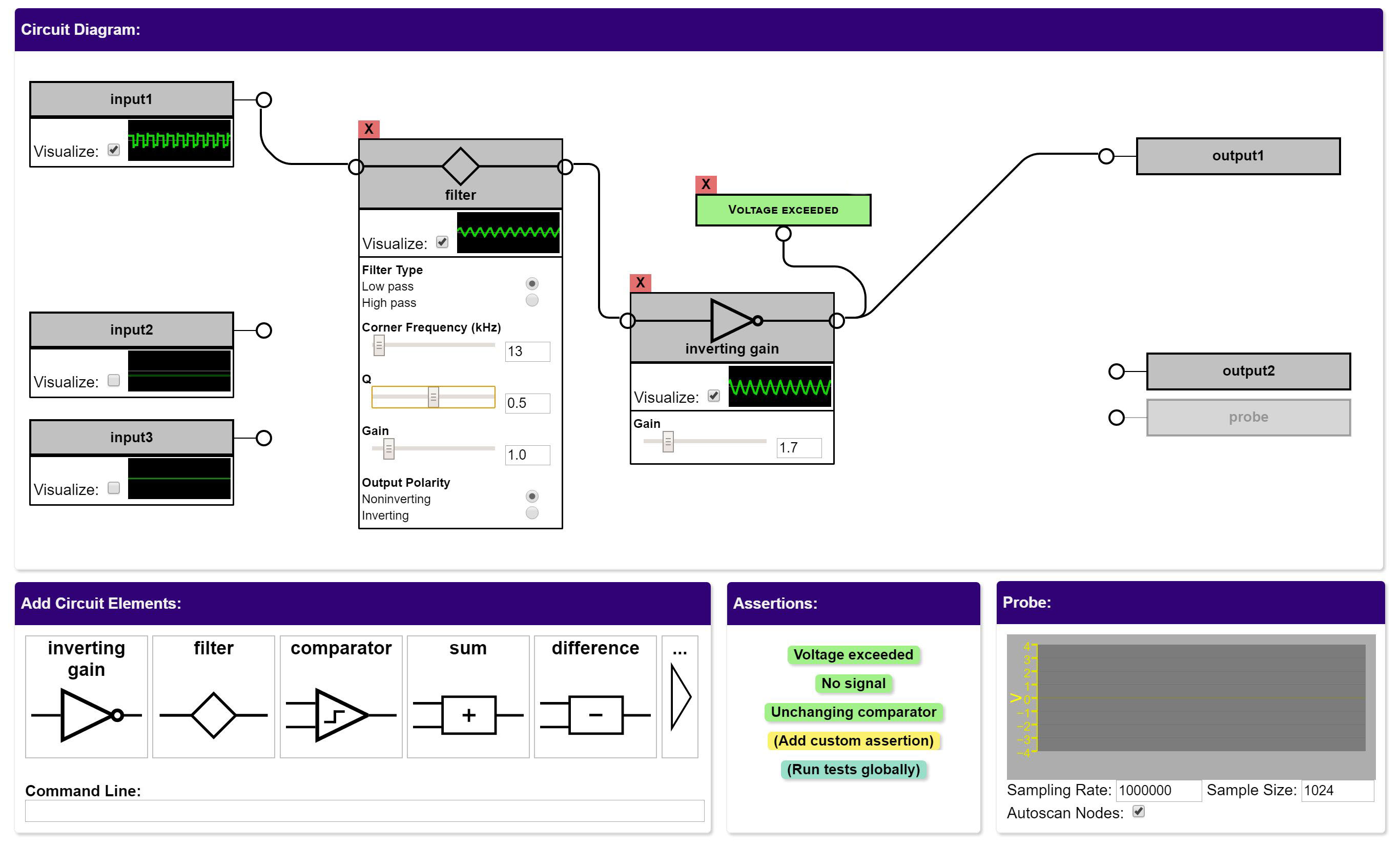

Scanalog

Interactive Design and Debugging of Analog Circuits with Programmable Hardware

UbiSwarm

Ubiquitous Robotic Interfaces and Investigation of Abstract Motion as a Display.

Pneumatic Reel Actuator

High-extension pneumatic actuator.

shiftIO

Reconfigurable Tactile Elements for dynamic physical controls.

Zooids

Building blocks for swarm user interfaces

Wolverine

A wearable haptic interface for grasping in virtual reality.

Rovables

Miniature on-body robots as mobile wearables

Switchable Permanent Magnetic Actuators

Applications in shape change and tactile display

Haptic Edge Display

Display for mobile tactile interaction.